What is Cloudflare?

- Overview of Cloudflare

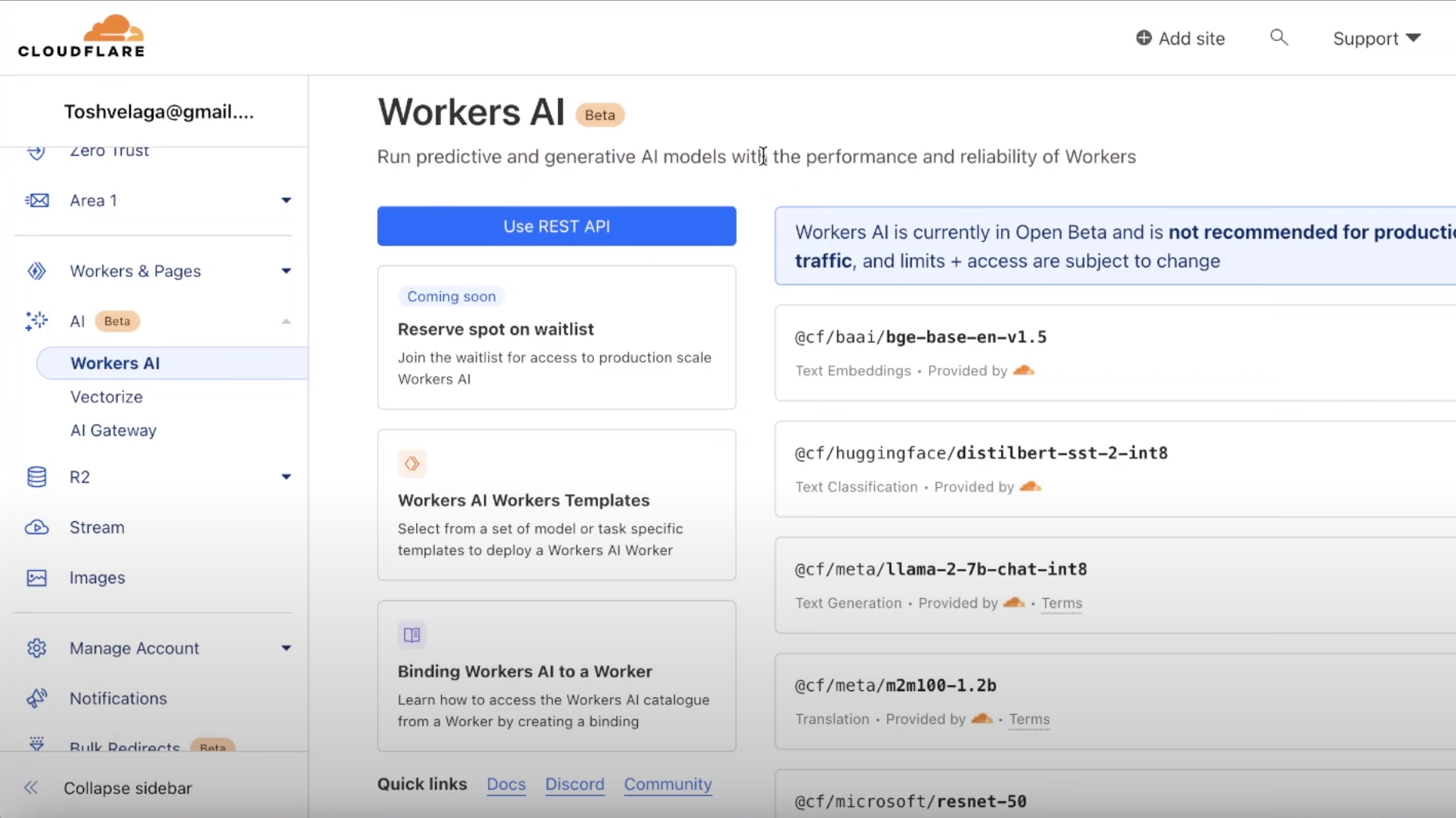

Utilize Cloudflare’s global network to build better assistive and generative AI apps.

With Cloudflare’s AI products, you can build full-stack, AI-powered applications or run fast, low-latency inference tasks on pre-trained machine learning models with Cloudflare Workers. You can also view and manage your AI applications or use WebGPU in Durable Objects.

Observe and control your AI applications.

Cloudflare’s AI Gateway allows you to gain visibility and control over your AI apps. By connecting your apps to AI Gateway, you can gather insights on how people are using your application with analytics and logging, and then control how your application scales with features such as caching, rate limiting, request retries, and model fallback. Better yet, it only takes one line of code to get started.

Key features include:

- Analytics: View metrics such as the number of requests, tokens, and the cost it takes to run your application

- Logging: Gain insight on requests and errors

- Caching: Serve requests directly from Cloudflare’s cache instead of the original model provider for faster requests and cost savings

- Rate limiting: Control how your application scales by limiting the number of requests your application receives

- Request retry and fallback: Improve resilience by defining request retry and model fallbacks in case of an error

- Support for your favorite providers: Workers AI, OpenAI, HuggingFace, and Replicate all work with AI Gateway (more to come)

- Response streaming: AI Gateway supports response streaming

Check out the Get started guide to learn how to configure your applications with AI Gateway.

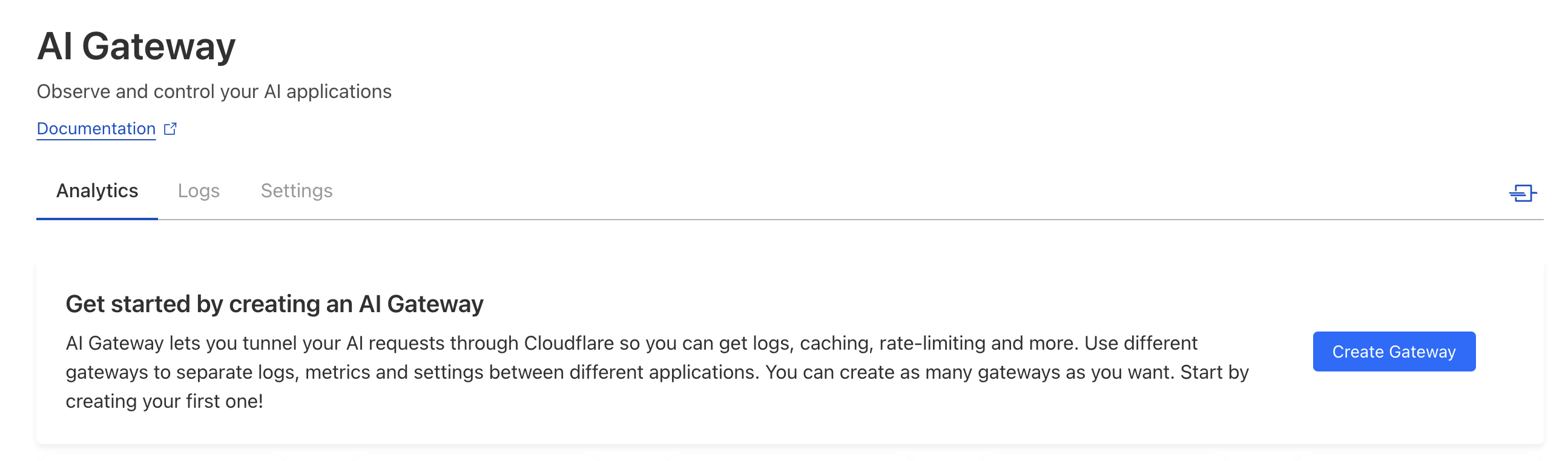

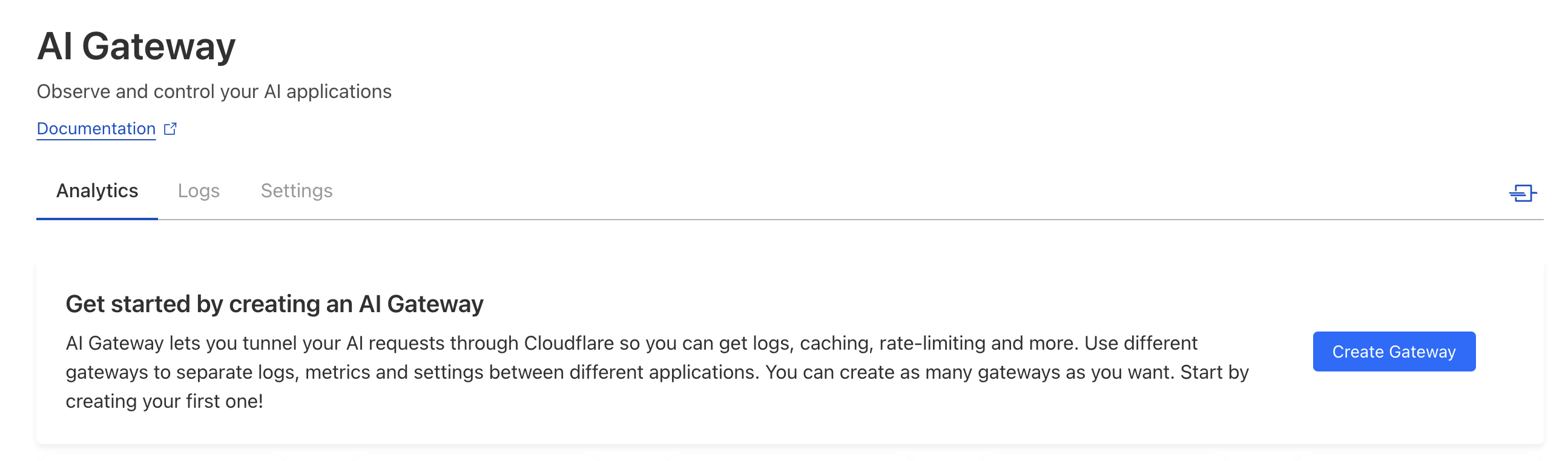

Creating your first AI Gateway

In this guide, you will learn how to create your first AI Gateway. You can create multiple gateways to control different applications.

To set up an AI Gateway you will need to:

- Create a Cloudflare account

- Navigate to AI Gateway in the dashboard, under the AI menu

- Create a new AI Gateway and enter your gateway name and URL slug

Connecting your application

In this guide, you will learn how to connect your application to your AI Gateway. You will need to have an AI Gateway created to continue with this guide.

Once you have configured a Gateway in the AI Gateway dashboard, click on “API Endpoints” to find your AI Gateway endpoint. AI Gateway offers multiple endpoints for each Gateway you create – one per provider and one universal endpoint.

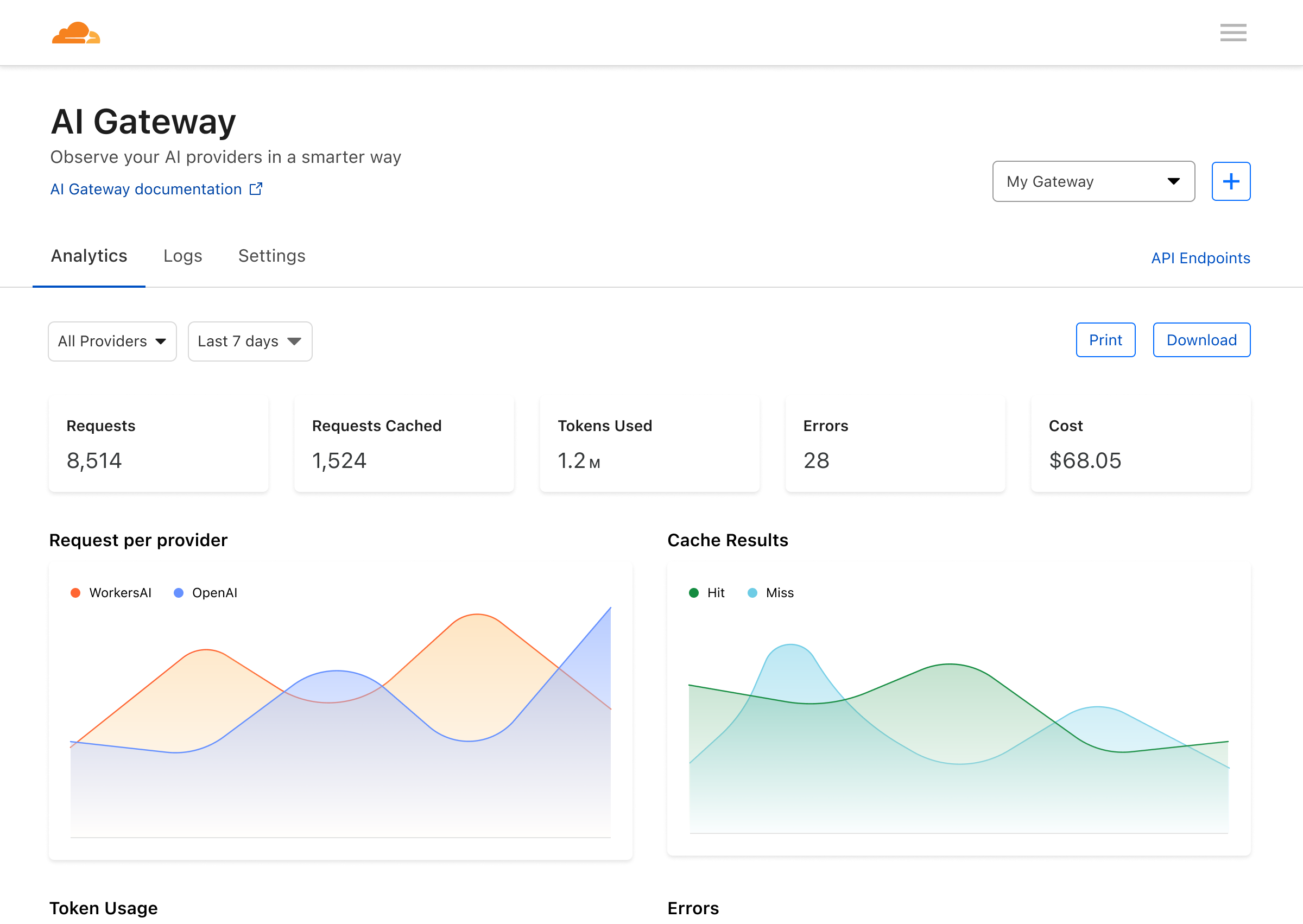

Observe and control

Now that your application is connected to the AI Gateway, you should be able to see requests coming in through the AI Gateway section of your Cloudflare dashboard. This guide shows you what data you can expect to see and what settings to configure for better control.

Analytics

On the first page of your AI Gateway dashboard you’ll see metrics on requests, tokens, caching, errors, and cost. You can filter these metrics by time and provider-type.

The cost metric is an estimation based on the number of tokens sent and received in requests. We currently only calculate costs for OpenAI GPT. If you stream responses, we use a tokenizer package to estimate the number of tokens used in order to calculate the cost. The cost metric is meant to be a helpful estimation to analyze and predict cost trends, but you should always refer to your provider dashboard to see an accurate cost number.
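To make the idea of a token-based estimate concrete, here is a minimal sketch of that kind of arithmetic. It is not AI Gateway's actual calculation: the `estimateCost` helper, the `TokenRates` shape, and the per-1K rates are all hypothetical placeholders, and real rates should come from your provider's pricing page.

```typescript
// Hypothetical rate table: price per 1,000 tokens, in whatever currency
// your provider bills in. These fields are assumptions for illustration.
interface TokenRates {
  inputPer1K: number;
  outputPer1K: number;
}

// Estimate cost as (tokens sent * input rate) + (tokens received * output rate).
function estimateCost(tokensIn: number, tokensOut: number, rates: TokenRates): number {
  return (tokensIn / 1000) * rates.inputPer1K + (tokensOut / 1000) * rates.outputPer1K;
}

// Placeholder rates, not real prices.
const placeholderRates: TokenRates = { inputPer1K: 0.001, outputPer1K: 0.002 };

// 1,000 tokens sent and 500 tokens received.
const estimate = estimateCost(1000, 500, placeholderRates);
console.log(estimate.toFixed(4));
```

As with the dashboard metric, an estimate like this is useful for spotting trends, while the provider dashboard remains the source of truth.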

Supported providers

Universal Endpoint

https://gateway.ai.cloudflare.com/v1/ACCOUNT_TAG/GATEWAY

AI Gateway offers multiple endpoints for each Gateway you create – one endpoint per provider, and one Universal Endpoint. The Universal Endpoint requires some adjusting to your schema, but supports additional features. These include retrying a request if it fails the first time and configuring a fallback model or provider for when a request fails.

You can use the Universal Endpoint to contact every provider. The payload is an array of messages, and each message is an object with the following parameters:

- provider: the name of the provider you would like to direct this message to. Can be openai, huggingface, or replicate.

- endpoint: the pathname of the provider API you’re trying to reach. For example, on OpenAI it can be chat/completions, and for HuggingFace this might be bigstar/code. See more in the sections that are specific to each provider.

- authorization: the content of the Authorization HTTP header that should be used when contacting this provider. This usually starts with “Token” or “Bearer”.

- query: the payload as the provider expects it in their official API.
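The payload described above can be sketched as follows. The four field names come from this page; everything else is an assumption for illustration: the `buildUniversalRequest` helper is hypothetical, the request is assumed to be a JSON `POST`, the tokens are placeholders, and the `query` bodies simply follow each provider's usual request shape. The second entry is written on the assumption that later entries serve as fallbacks for earlier ones.

```typescript
// One entry in the Universal Endpoint payload, using the four documented fields.
interface UniversalMessage {
  provider: "openai" | "huggingface" | "replicate";
  endpoint: string;      // provider API pathname, e.g. "chat/completions"
  authorization: string; // full Authorization header value
  query: unknown;        // body in the provider's own format
}

// Hypothetical helper: returns the pieces you would pass to fetch().
function buildUniversalRequest(accountTag: string, gateway: string, messages: UniversalMessage[]) {
  return {
    url: `https://gateway.ai.cloudflare.com/v1/${accountTag}/${gateway}`,
    method: "POST", // assumption: the payload is sent as a JSON POST body
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(messages),
  };
}

const universalRequest = buildUniversalRequest("ACCOUNT_TAG", "GATEWAY", [
  {
    provider: "openai",
    endpoint: "chat/completions",
    authorization: "Bearer OPENAI_TOKEN", // placeholder token
    query: {
      model: "gpt-3.5-turbo",
      messages: [{ role: "user", content: "What is Cloudflare?" }],
    },
  },
  {
    provider: "huggingface",
    endpoint: "bigstar/code",
    authorization: "Bearer HF_TOKEN", // placeholder token
    query: { inputs: "What is Cloudflare?" },
  },
]);

// To send it: fetch(universalRequest.url, universalRequest)
console.log(universalRequest.url);
```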

Workers AI

https://gateway.ai.cloudflare.com/v1/ACCOUNT_TAG/GATEWAY/workers-ai/

When making requests to Workers AI, replace https://api.cloudflare.com/client/v4/accounts/ACCOUNT_TAG/ai/run in the URL you’re currently using with https://gateway.ai.cloudflare.com/v1/ACCOUNT_TAG/GATEWAY/workers-ai.

Then add the model you want to run at the end of the URL. You can see the list of Workers AI models and pick the ID.

You’ll need to generate an API token with Workers AI read access and use it in your request.
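Putting these three steps together, a request could be assembled roughly like this. The `workersAiRequest` helper is hypothetical, the model ID and the `{ prompt }` input are examples only (check the Workers AI model list for the ID and input format you need), and the sketch assumes the API token is sent as a `Bearer` Authorization header.

```typescript
// Hypothetical helper: builds a Workers AI request routed through AI Gateway.
function workersAiRequest(
  accountTag: string,
  gateway: string,
  model: string,
  apiToken: string,
  input: unknown,
) {
  const base = `https://gateway.ai.cloudflare.com/v1/${accountTag}/${gateway}/workers-ai`;
  return {
    url: `${base}/${model}`, // the model ID goes at the end of the URL
    method: "POST",
    headers: {
      Authorization: `Bearer ${apiToken}`, // assumption: token sent as a Bearer header
      "Content-Type": "application/json",
    },
    body: JSON.stringify(input),
  };
}

const workersAiCall = workersAiRequest(
  "ACCOUNT_TAG",
  "GATEWAY",
  "@cf/meta/llama-2-7b-chat-int8", // example model ID
  "API_TOKEN",                     // placeholder token
  { prompt: "What is Cloudflare?" },
);

// To send it: fetch(workersAiCall.url, workersAiCall)
console.log(workersAiCall.url);
```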

OpenAI

https://gateway.ai.cloudflare.com/v1/ACCOUNT_TAG/GATEWAY/openai

When making requests to OpenAI, replace https://api.openai.com/v1 in the URL you’re currently using with https://gateway.ai.cloudflare.com/v1/ACCOUNT_TAG/GATEWAY/openai.
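If your code builds OpenAI URLs in several places, the swap can be centralized in one function. This is only a sketch: `toOpenAiGatewayUrl` is a hypothetical helper, not part of any SDK, and it keeps whatever path follows the OpenAI base URL unchanged.

```typescript
const OPENAI_BASE = "https://api.openai.com/v1";

// Hypothetical helper: swaps the OpenAI base URL for the gateway's OpenAI endpoint.
function toOpenAiGatewayUrl(originalUrl: string, accountTag: string, gateway: string): string {
  if (originalUrl.slice(0, OPENAI_BASE.length) !== OPENAI_BASE) {
    throw new Error("Not an OpenAI API URL: " + originalUrl);
  }
  const gatewayBase = `https://gateway.ai.cloudflare.com/v1/${accountTag}/${gateway}/openai`;
  // Keep the remainder of the path (for example "/chat/completions") as it was.
  return gatewayBase + originalUrl.slice(OPENAI_BASE.length);
}

const openAiGatewayUrl = toOpenAiGatewayUrl(
  "https://api.openai.com/v1/chat/completions",
  "ACCOUNT_TAG",
  "GATEWAY",
);
console.log(openAiGatewayUrl);
```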

HuggingFace

https://gateway.ai.cloudflare.com/v1/ACCOUNT_TAG/GATEWAY/huggingface

When making requests to HuggingFace Inference API, replace https://api-inference.huggingface.co/models/ in the URL you’re currently using with https://gateway.ai.cloudflare.com/v1/ACCOUNT_TAG/GATEWAY/huggingface. Note that the model you’re trying to access should come right after, for example https://gateway.ai.cloudflare.com/v1/ACCOUNT_TAG/GATEWAY/huggingface/bigcode/starcoder.
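One detail to watch: the HuggingFace prefix ends with a slash while the gateway endpoint does not, so a plain string replacement would fuse `huggingface` and the model name. The sketch below handles that by extracting the model path first; `toHuggingFaceGatewayUrl` is a hypothetical helper written for illustration.

```typescript
const HF_PREFIX = "https://api-inference.huggingface.co/models/";

// Hypothetical helper: rewrites a HuggingFace Inference API URL to its gateway form.
function toHuggingFaceGatewayUrl(originalUrl: string, accountTag: string, gateway: string): string {
  if (originalUrl.slice(0, HF_PREFIX.length) !== HF_PREFIX) {
    throw new Error("Not a HuggingFace Inference API model URL: " + originalUrl);
  }
  // Everything after the prefix is the model path, e.g. "bigcode/starcoder".
  const model = originalUrl.slice(HF_PREFIX.length);
  // Re-insert the slash between the endpoint and the model path.
  return `https://gateway.ai.cloudflare.com/v1/${accountTag}/${gateway}/huggingface/${model}`;
}

const hfGatewayUrl = toHuggingFaceGatewayUrl(
  "https://api-inference.huggingface.co/models/bigcode/starcoder",
  "ACCOUNT_TAG",
  "GATEWAY",
);
console.log(hfGatewayUrl);
```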

Replicate

https://gateway.ai.cloudflare.com/v1/ACCOUNT_TAG/GATEWAY/replicate

When making requests to Replicate, replace https://api.replicate.com/v1 in the URL you’re currently using with https://gateway.ai.cloudflare.com/v1/ACCOUNT_TAG/GATEWAY/replicate.

Tutorials

Deploy a Worker that connects to OpenAI via AI Gateway

Overview

In this tutorial, you will learn how to deploy a Worker that makes calls to OpenAI through AI Gateway. AI Gateway helps you better observe and control your AI applications with more analytics, caching, rate limiting, and logging.

This tutorial uses the most recent v4 OpenAI node library, an update released in August 2023.

Before you start

All of the tutorials assume you have already completed the Get started guide, which gets you set up with a Cloudflare Workers account, C3, and Wrangler.

1. Create an AI Gateway and OpenAI API key

On the AI Gateway page in the Cloudflare dashboard, create a new AI Gateway by clicking the plus button on the top right. You should be able to name the gateway as well as the endpoint. Click on the API Endpoints button to copy the endpoint. You can choose from provider-specific endpoints such as OpenAI, HuggingFace, and Replicate, or use the universal endpoint, which accepts a specific schema and supports model fallback and retries.

For this tutorial, we will be using the OpenAI provider-specific endpoint, so select OpenAI in the dropdown and copy the new endpoint.

You will also need an OpenAI account and API key for this tutorial. If you do not have one, create a new OpenAI account and create an API key to continue with this tutorial. Make sure to store your API key somewhere safe so you can use it later.
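With the endpoint and API key in hand, the v4 OpenAI library can be pointed at the gateway through its `baseURL` constructor option. The sketch below only prepares the options object so that it runs without the library installed; `gatewayClientOptions` is a hypothetical helper, and `env.OPENAI_API_KEY` in the comment is an assumed name for a Worker secret.

```typescript
// Shape of the two client options used here (the library accepts more).
interface GatewayClientOptions {
  apiKey: string;
  baseURL: string;
}

// Hypothetical helper: pairs the API key with the copied gateway endpoint.
function gatewayClientOptions(apiKey: string, gatewayEndpoint: string): GatewayClientOptions {
  // Trim trailing slashes so request paths are appended cleanly.
  return { apiKey, baseURL: gatewayEndpoint.replace(/\/+$/, "") };
}

const clientOptions = gatewayClientOptions(
  "OPENAI_API_KEY_VALUE", // placeholder; keep the real key in a secret
  "https://gateway.ai.cloudflare.com/v1/ACCOUNT_TAG/GATEWAY/openai/",
);

// Inside a Worker, with the library installed, this would be used roughly as:
//   import OpenAI from "openai";
//   const openai = new OpenAI(gatewayClientOptions(env.OPENAI_API_KEY, ENDPOINT));
console.log(clientOptions.baseURL);
```

Keeping the endpoint in one place like this makes it easy to switch between gateways, for example one per application.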

Changelog

2023-10-09

- Logs: Logs are now limited to the last 24 hours. If you have a use case that requires more logging, please reach out to the team on Discord.

- Dashboard: Logs now refresh automatically.

- Docs: Fixed Workers AI example in docs and dash.

- Caching: Embedding requests are now cacheable. Rate limiting does not apply to cached requests.

- Bugs: Identical requests to different providers are no longer wrongly served from cache. Streaming now works as expected, including for the Universal Endpoint.

- Known Issues: There’s currently a bug with costs that we are investigating.